"A riveting story of what it means to be human in a world changed by artificial intelligence, revealing the perils and inequities of our growing reliance on automated decision-making"

People have started forming relationships with AI systems as friends, lovers, mentors, therapists, and teachers, with these "sycophantic" companions "optimized to suit the precise preferences of whoever [they are] interacting with", write Robert Mahari, a joint JD-PhD candidate at the MIT Media Lab and Harvard Law School, and Pat Pataranutaporn, a researcher at the MIT Media Lab, in the MIT Technology Review.

We conducted a quick, initial analysis of six AI-based service platforms for hosting and analysing UX research: HeyMarvin, Lookback, AddMaple, Reveal, Outset, and Voicepanel. It brought up more questions than answers.

The latest issue of Interactions magazine, published by ACM, contains five feature stories, four of which are about AI and interaction design.

When we make decisions, our thinking is informed by societal norms, “guardrails” that guide our decisions, like the laws and rules that govern us. But what are good guardrails in today’s world of overwhelming information flows and increasingly powerful technologies, such as artificial intelligence? Based on the latest insights from the cognitive sciences, economics, and public policy, Guardrails offers a novel approach to shaping decisions by embracing human agency in its social context.

Practitioners praise some efficiency gains in process tasks, but are skeptical about the real value in analysis and insight gathering, despite the many marketing claims.

Cameron Hanson, Strategy Director at Smart Design, gave a presentation at the Service Design Network (SDN) New York Chapter on practical ways to integrate GenAI into the design research process.

Proceedings of the 15th Biannual Conference of the Italian SIGCHI Chapter

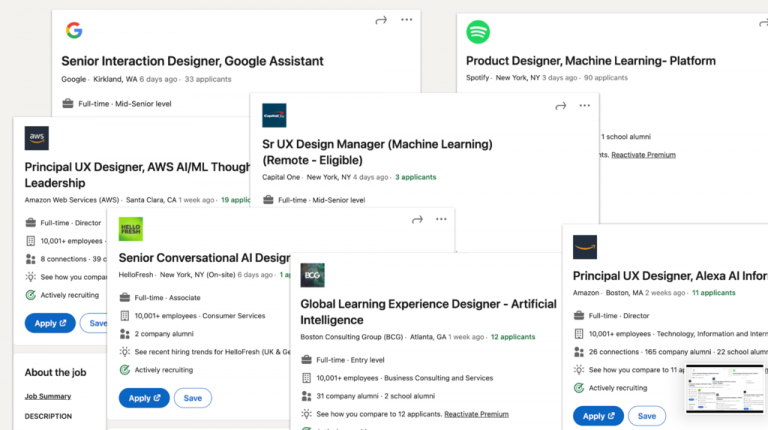

"UX professionals must seize the AI career imperative or become irrelevant", writes Jakob Nielsen on his blog UX Tigers, especially since current AI-driven tools are "far from user-friendly with their clunky, prompt-driven interfaces" and adult (digital) literacy remains limited.

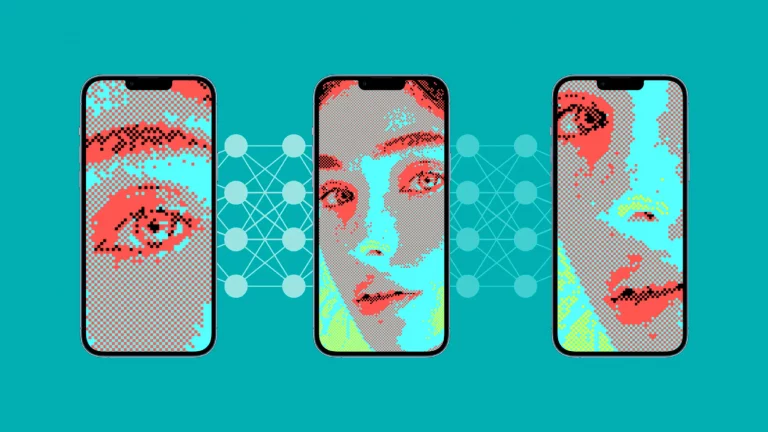

If you're a non-native English writer, you should know GPT detectors are biased against you.

Ten conversations between Urban AI, a Paris-based think tank, and experts from around the world, exploring the future of urban artificial intelligence.

The book sheds new light on some of the most important themes in AI ethics, from the differences between Chinese and American visions of AI, to digital neo-colonialism. It is an essential work for anyone wishing to understand how different cultural contexts interplay with the most significant technology of our time.

A new area called "human-centered machine learning" (HCML) promises to balance technological possibilities with human needs and values. However, there are no unifying guidelines on what "human-centered" means, nor how HCML research and practice should be conducted.

This article by Stevie Chancellor in Communications of the ACM draws on the interdisciplinary history of human-centered thinking, HCI, AI, and science and technology studies to propose best practices for HCML.

This report by the IndustryLab of Ericsson, the Swedish multinational, aims to introduce the ethics of AI and explore how this fast-growing technology needs to align with humans’ moral and ethical principles if it is to be embraced by society at large.

Hal Wuertz, Adam Cutler and Milena Pribic of IBM Design defined a unique set of five skills for “AI Design.”

At the "AI in the Loop: Humans in Charge" conference, which took place Nov. 15 at Stanford University, panelists proposed a new definition of human-centered AI – one that emphasizes the need for systems that improve human life and challenges problematic incentives that currently drive the creation of AI tools.

Bringing together a motley crew of social scientists and data scientists, the aim of this special theme issue of Big Data & Society is to explore what an integration or even fusion between anthropology and data science might look like.

“All research is qualitative; some is also quantitative”

Harvard social scientist and statistician Gary King

From transforming the ways we do business and reimagining health care, to creating planet-restoring housing and humanizing our digital lives in an age of AI, Expand explores how expansive thinking across six key areas—time, proximity, value, life, dimensions, and sectors—can provide radical, useful solutions to a whole host of current problems around the globe.

Best practices for addressing the bias and inequality that may result from the automated collection, analysis, and distribution of large datasets.