Artificial Intelligence is permeating a wide range of areas and it is bound to transform work and society. This dossier asks what needs to be done politically in order to shape this transformation for the sake of the common good.

New worlds need new language. One of the things to name is what is happening to ourselves and our data proxies. Expanding our language from privacy to personhood lets us have conversations that help us see that our data is us, our data is valuable, and our data is being collected automatically.

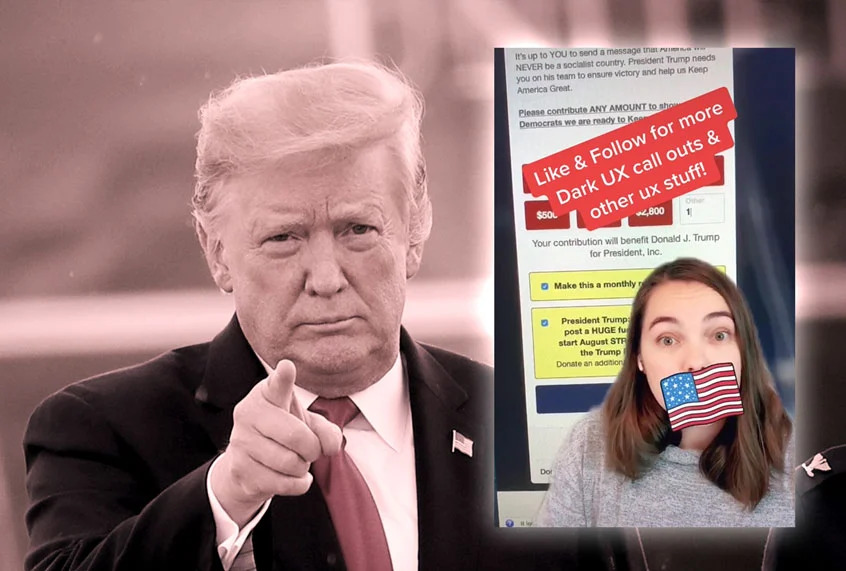

While UX designers are trained to be on the side of the user, the user experience can be manipulated to favor the "product", which in this case is a candidate. UX designer Mary Formanek broke down how this worked in an interview with Salon.

The book explores the future of artificial intelligence (AI) through interviews with AI experts, reviews AI history, product examples, and failures, and proposes a UX framework to help make AI successful.

This article argues [that] the well-publicized social ills of computing will not go away simply by integrating ethics instruction or codes of conduct into computing curricula. The remedy to these ills instead lies less in philosophy and more in fields…

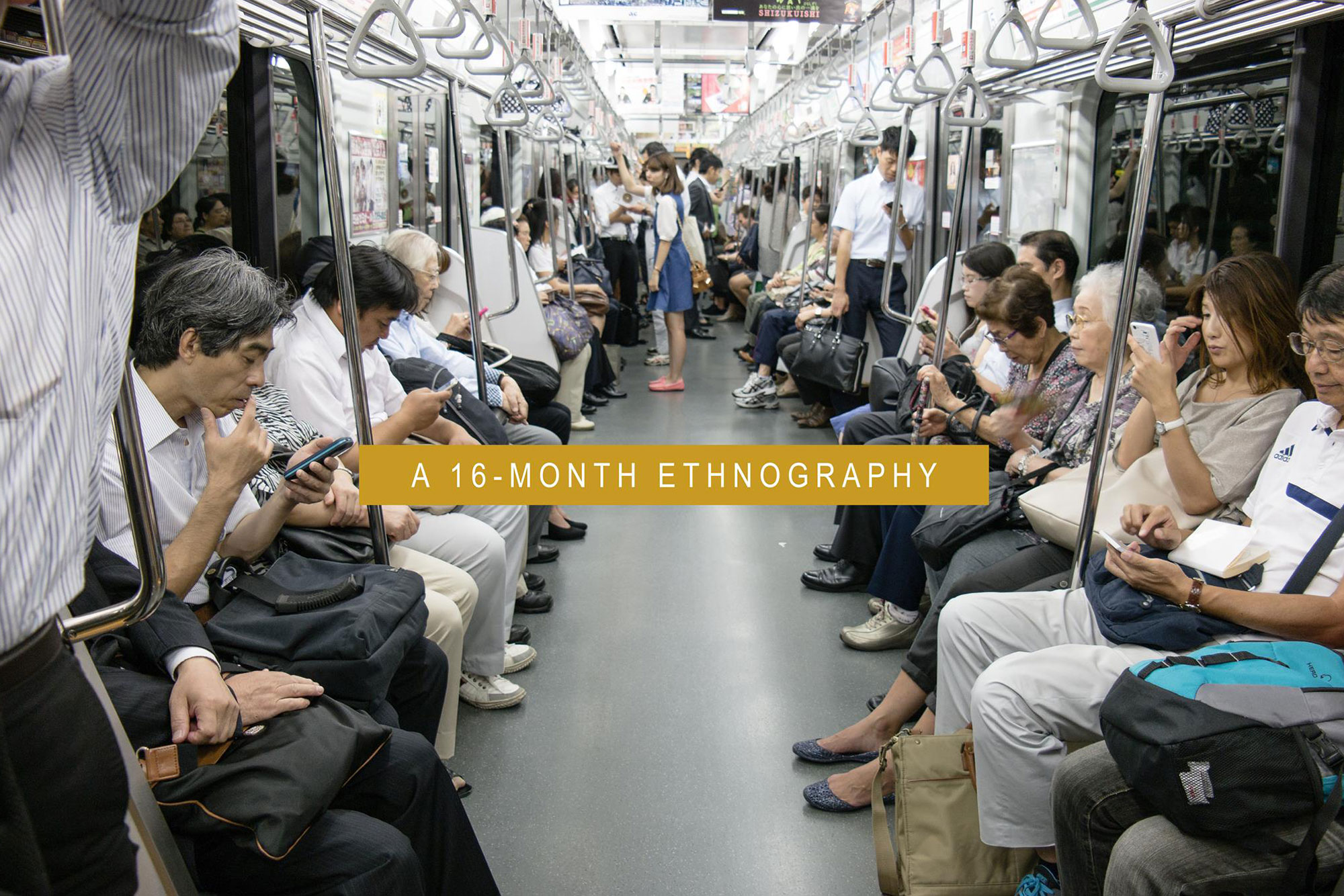

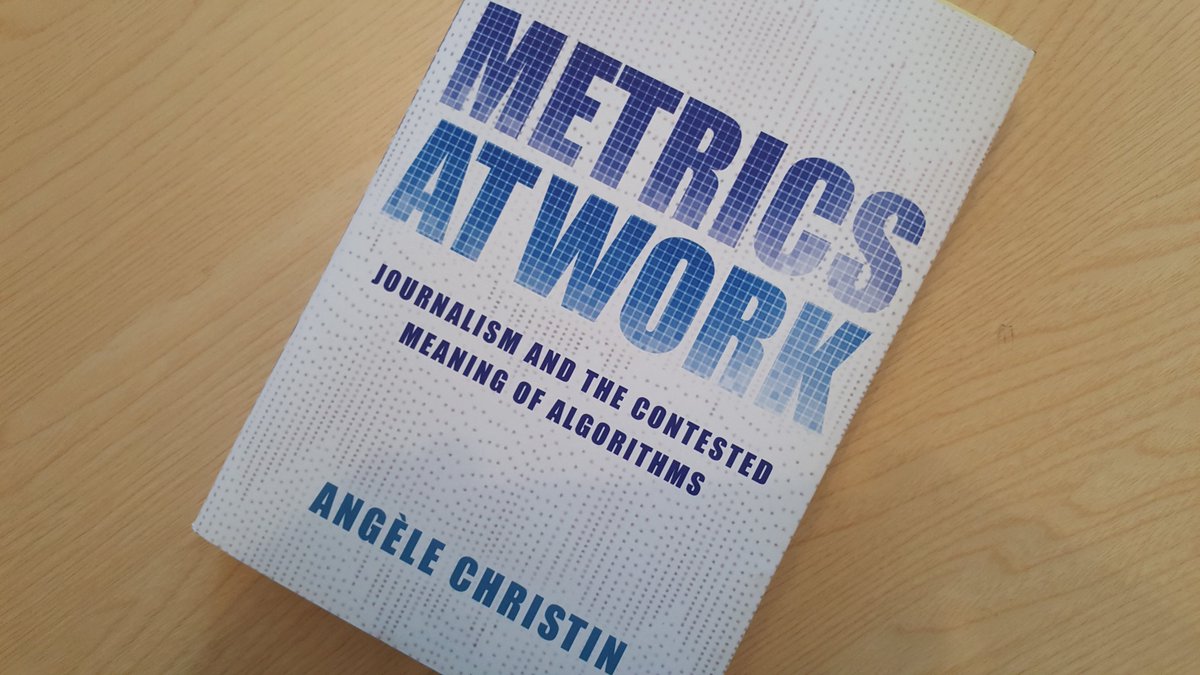

Angèle Christin argues that we can explicitly enroll algorithms in ethnographic research, which can shed light on unexpected aspects of algorithmic systems - including their opacity. She delineates three mesolevel strategies for algorithmic ethnography.

The starkly different ways that American and French online news companies respond to audience analytics and what this means for the future of news.

While it's easy to blame the user, phishing schemes have become incredibly sophisticated and believable. So, instead of blaming the user, we want to bring an empathetic lens and understand more about their needs.

Based on fieldwork conducted in Geneva, Los Angeles, and Tokyo, this book addresses the specifically anthropological dimension of the smartphone.

AI is poised to disrupt our work and our lives. We can harness these technologies rather than fall captive to them - but only through wise regulation.

In our data-driven society, it is too easy to assume the transparency of data. Instead, we should approach data sets with an awareness that they are created by humans and their dutiful machines, at a time, in a place, with the instruments at hand, for audiences that are conditioned to receive them, says Yanni Alexander Loukissas, Assistant Professor of Digital Media in the School of Literature, Media, and Communication at Georgia Tech.

Carissa Véliz is a philosopher and ethicist who works on digital ethics, practical ethics more generally, political philosophy, and public policy.

This special issue of the Journal of Digital Social Research collects the confessions of five digital ethnographers laying bare their methodological failures, disciplinary posturing, and ethical dilemmas.

The Brussels-based digital participation platform CitizenLab asked 12 digital democracy experts to share their predictions on the future of digital democracy.

The psychologist Amy Orben talks about the widespread fear that smartphones are harmful to our wellbeing - and the difficulty of proving it.

Smartphone attachment is so prevalent that the fear of being without a phone has a name: nomophobia, writes Elizabeth Churchill in Interactions. What can be done to manage such unhealthy attachments?

CES indicates we're still a long way from seeing technology for the home that genuinely fosters our sense of comfort, wellbeing and community.

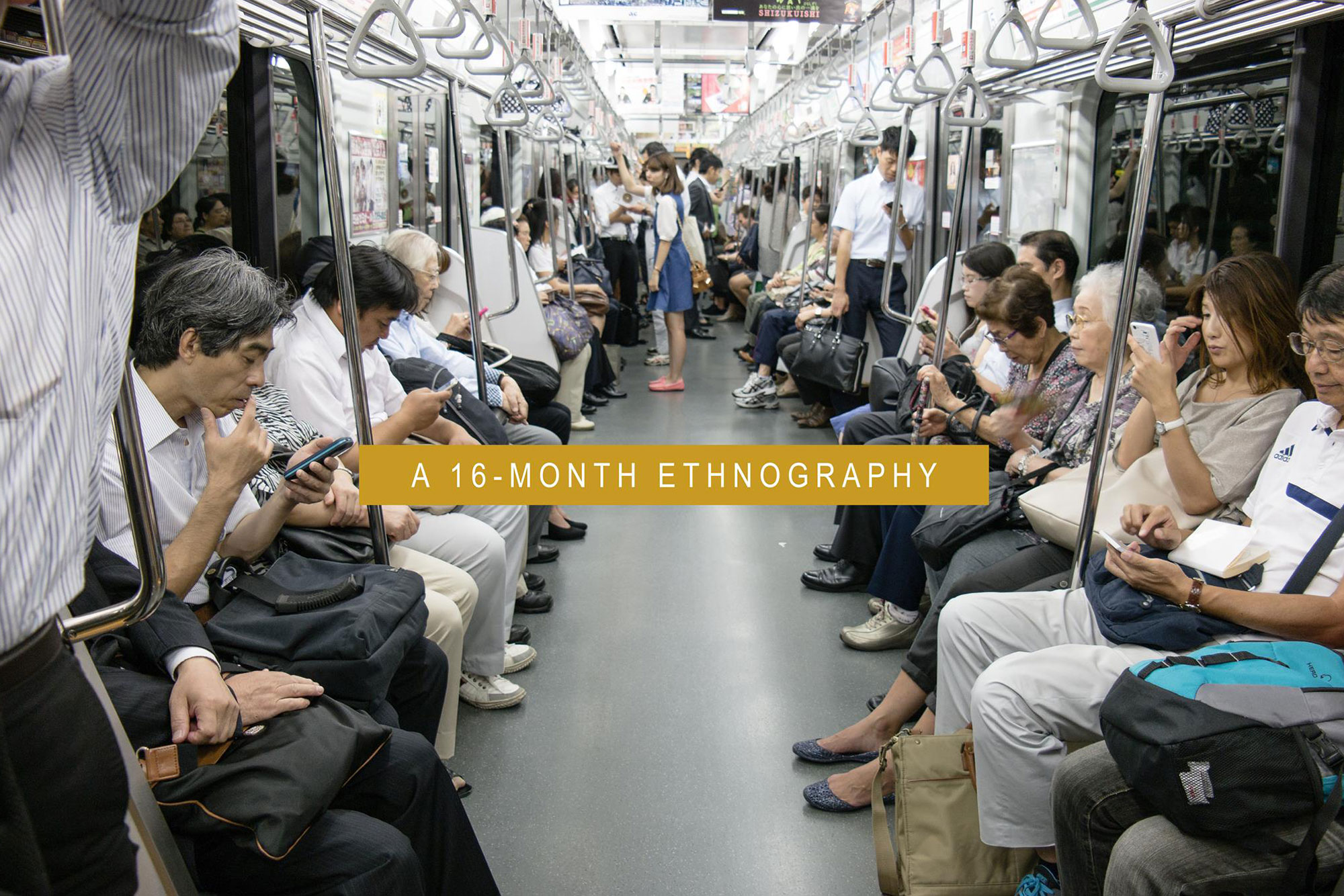

The Anthropology of Smartphones and Smart Ageing, a multi-sited research project based at UCL Anthropology, employs a team of 11 anthropologists conducting simultaneous 16-month ethnographies in Ireland, Italy, Cameroon, Uganda, Brazil, Chile, Al-Quds, China, and Japan.

In this special issue, the New York Times Magazine has tried to see the internet and its likely future as best it can, in the hope that - after decades of imagining it as a utopia, and then a few years of seeing it as a dystopia - we might finally begin to see it for what it is: a set of powerful technologies in the midst of some serious flux.

The central charge to HCI is to nurture and sustain human dignity and flourishing. Why are HCI researchers and practitioners now on the wrong side of many of the problematic developments in the contemporary technology landscape?